A bot is a software program that automates specific tasks so that they can run without further human intervention. One of the main advantages of bots is that they complete automated tasks significantly faster than humans can.

This article will describe how bots function and their primary classifications.

How Do Bots Function?

Bots are programmed with sets of algorithms that define how they carry out their assigned tasks. Different types of bots are designed for a wide variety of activities, from interacting with humans to extracting data from websites.

Chatbots, for instance, can operate in a variety of ways. A rule-based bot communicates with humans by presenting them with predefined options, whereas a more sophisticated bot uses machine learning to learn from conversations and pick out specific keywords. These bots may also use pattern recognition or natural language processing (NLP).
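As a rough illustration of the rule-based approach, the sketch below offers a fixed menu and answers only the options it knows. The menu entries, the wording of the replies, and the function names are all made up for this example.

```python
# Hypothetical menu: each key maps to (label shown to the user, canned reply).
MENU = {
    "1": ("Track an order", "Please enter your order number."),
    "2": ("Opening hours", "We are open Monday to Friday, 9:00 to 17:00."),
    "3": ("Talk to an agent", "Connecting you to a human agent."),
}


def render_menu() -> str:
    """Build the list of predefined options presented to the user."""
    lines = ["How can I help? Reply with a number:"]
    lines += [f"{key}. {label}" for key, (label, _reply) in MENU.items()]
    return "\n".join(lines)


def reply(choice: str) -> str:
    """Return the canned reply for a menu choice, or show the menu again."""
    entry = MENU.get(choice.strip())
    if entry is None:
        # A rule-based bot cannot handle input outside its predefined rules.
        return "Sorry, I did not understand that.\n" + render_menu()
    return entry[1]
```

Anything outside the menu simply brings the menu back, which is the main limitation that machine learning and NLP-based bots try to overcome.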

For obvious reasons, bots do not use a mouse or click on content in a conventional web browser. In most cases they do not use a graphical browser at all. Instead, bots are software programs that, among other functions, send HTTP requests directly and, when a page has to be rendered, typically rely on a headless browser.
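To make this concrete, here is a minimal sketch of a bot fetching a page with nothing but an HTTP request, using Python's standard library. The bot name, the contact URL in the User-Agent string, and the function names are assumptions made for this example.

```python
import urllib.request

# Hypothetical bot name and contact URL, chosen only for this example.
USER_AGENT = "ExampleBot/1.0 (+https://example.com/bot-info)"


def build_request(url: str) -> urllib.request.Request:
    """Prepare a GET request that identifies the bot through its User-Agent."""
    return urllib.request.Request(url, headers={"User-Agent": USER_AGENT})


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Send the request and return the response body as text."""
    with urllib.request.urlopen(build_request(url), timeout=timeout) as response:
        # Fall back to UTF-8 when the server does not declare a charset.
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")
```

Calling `fetch_html("https://example.com/")` would return the raw HTML of that page. No JavaScript is executed along the way, which is why bots that need the rendered page turn to a headless browser.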

Types Of Bots

There are numerous types of bots performing diverse tasks on the internet. Some of them are legitimate, while others have malicious intentions. Let’s examine the main ones to get a better understanding of the bot ecosystem.

Web Crawlers

Web crawlers, also known as web spiders or spider bots, browse the web for content. These bots help search engines crawl, catalog, and index web pages so that they can provide their services efficiently. Crawlers retrieve HTML, CSS, JavaScript, and images in order to process a website’s content. Website owners can place a robots.txt file in the server’s root directory to tell bots which pages they may and may not crawl.
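A well-behaved crawler checks these rules before requesting a page. The sketch below does so with Python's built-in `urllib.robotparser`. The sample robots.txt content, the bot name, and the URLs are invented for illustration.

```python
from urllib.robotparser import RobotFileParser

# Invented robots.txt content: everything is crawlable except /private/.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /
"""


def make_parser(robots_txt: str) -> RobotFileParser:
    """Load robots.txt rules from a string instead of fetching them."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def is_allowed(parser: RobotFileParser, user_agent: str, url: str) -> bool:
    """Return True when the rules permit this user agent to crawl the URL."""
    return parser.can_fetch(user_agent, url)
```

In a real crawler the rules would normally be loaded from the site itself with `set_url()` and `read()`. Keep in mind that robots.txt is advisory: it tells bots what the owner wants, but it does not technically prevent access.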

Monitoring Bots

Site monitoring bots track the health of a website, including its loading times. This enables website owners to spot potential problems early and improve the user experience.
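A very small version of such a check might look like the sketch below, which times a single request and flags the site when it fails or responds slowly. The two-second threshold and all names are assumptions made for this example, not a recommended standard.

```python
import time
import urllib.request
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class CheckResult:
    ok: bool                # True when the site answered 200 quickly enough
    status: Optional[int]   # HTTP status code, or None if the request failed
    seconds: float          # Time the request took


def fetch_status(url: str) -> int:
    """Request the URL and return its HTTP status code."""
    with urllib.request.urlopen(url, timeout=10.0) as response:
        return response.status


def check_site(
    url: str,
    fetch: Callable[[str], int] = fetch_status,
    slow_after: float = 2.0,  # assumed threshold in seconds
) -> CheckResult:
    """Time one request and report whether the site looks healthy."""
    start = time.perf_counter()
    try:
        status: Optional[int] = fetch(url)
    except OSError:
        # urllib raises OSError subclasses for HTTP errors, network
        # failures, and timeouts.
        status = None
    elapsed = time.perf_counter() - start
    return CheckResult(status == 200 and elapsed <= slow_after, status, elapsed)
```

A monitoring bot would run a check like this on a schedule and alert the owner when `ok` is false. The `fetch` parameter exists only so the check can be tried without network access.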

Scraping Bots

Web scraping bots are similar to crawlers, but instead of indexing whole pages they read publicly accessible websites to extract specific data points, such as real estate listings. Such information can be used for research, ad verification, brand protection, and other purposes.
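The extraction step can be sketched with Python's built-in `html.parser`. The HTML snippet, its class names, and the listing data below are invented for this example; real pages are usually messier, and many scrapers use a dedicated parsing library instead.

```python
from html.parser import HTMLParser

# Invented markup standing in for a real estate listings page.
SAMPLE_HTML = """
<ul>
  <li class="listing"><span class="address">12 Oak St</span> <span class="price">$350,000</span></li>
  <li class="listing"><span class="address">8 Elm Ave</span> <span class="price">$425,500</span></li>
</ul>
"""


class ListingParser(HTMLParser):
    """Collect the address and price of every listing on the page."""

    def __init__(self) -> None:
        super().__init__()
        self.listings: list[dict[str, str]] = []
        self._field: str | None = None  # field currently being read

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "li" and css_class == "listing":
            self.listings.append({})  # start a new record
        elif tag == "span" and css_class in ("address", "price") and self.listings:
            self._field = css_class

    def handle_data(self, data):
        if self._field:
            self.listings[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


def extract_listings(html: str) -> list[dict[str, str]]:
    """Parse the HTML and return one dictionary per listing."""
    parser = ListingParser()
    parser.feed(html)
    parser.close()
    return parser.listings
```

Here `extract_listings(SAMPLE_HTML)` yields two records, each with an `address` and a `price`, which is the kind of structured output a scraper passes on for analysis.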

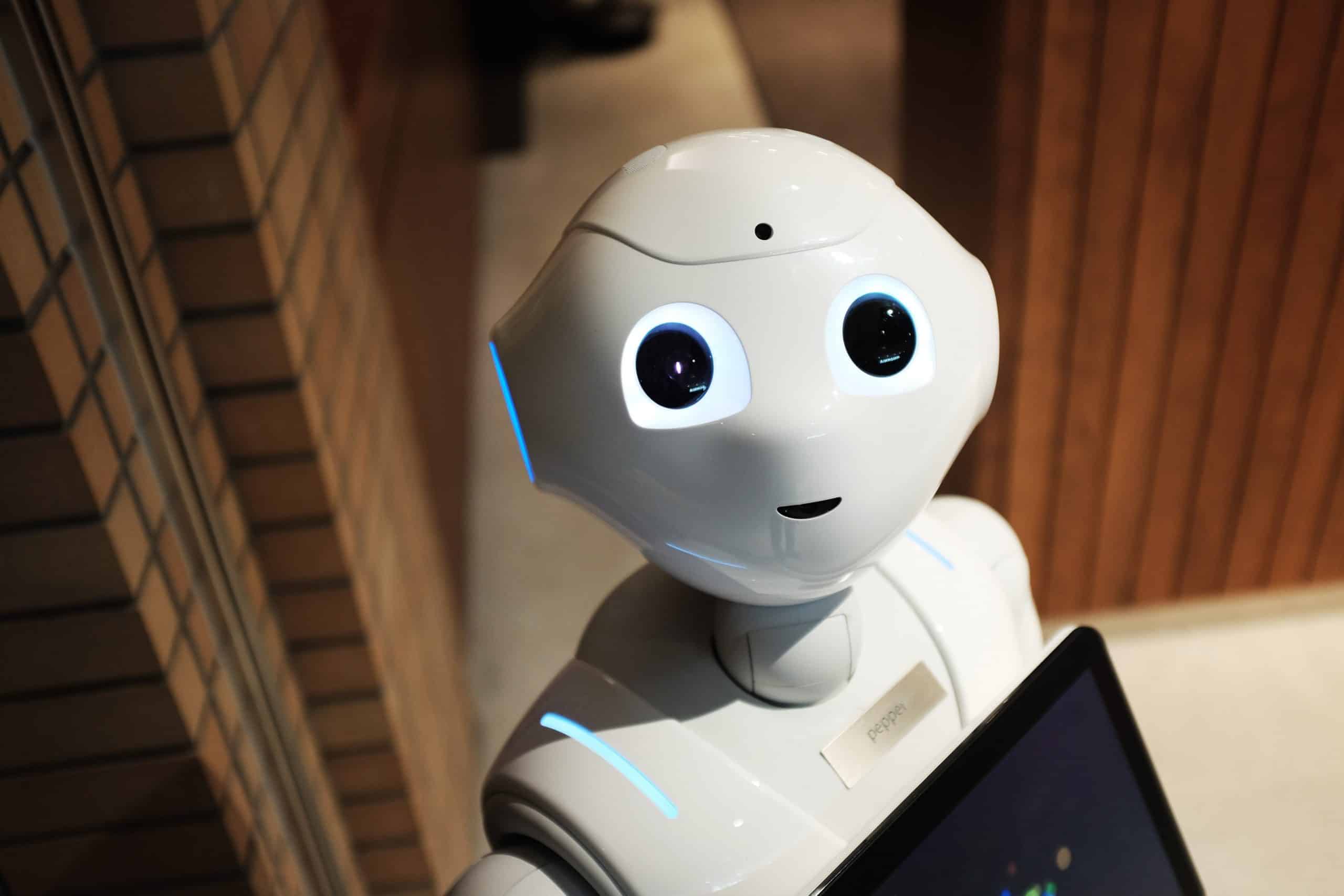

Chatbots

As mentioned earlier, chatbots can simulate human conversation and respond to users with predefined sentences. ELIZA, one of the best-known chatbots, was created in the mid-1960s, long before the web existed. It imitated a psychotherapist and turned most user statements into questions based on particular keywords. Today, most chatbots use a combination of scripts and machine learning.
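The keyword technique can be illustrated with a few regular expressions. This is only a toy sketch in the spirit of ELIZA, not its original script: the rules, the pronoun table, and the fallback line are all invented for this example.

```python
import re

# Invented keyword rules: a pattern to look for and a question template.
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father|family)\b", re.IGNORECASE),
     "Tell me more about your {0}."),
]

# Swap first-person words so the echoed fragment reads naturally.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are"}

FALLBACK = "Please go on."


def reflect(fragment: str) -> str:
    """Replace first-person words in the fragment with second-person ones."""
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(utterance: str) -> str:
    """Turn the user's statement into a question using the first matching rule."""
    for pattern, template in RULES:
        match = pattern.search(utterance)
        if match:
            fragment = match.group(1).rstrip(".!?")
            return template.format(reflect(fragment))
    return FALLBACK
```

For instance, `respond("I am worried about my exams")` produces "How long have you been worried about your exams?". The bot has no understanding of the sentence; it only matches a keyword and echoes the rest back.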

Spam Bots

Spam bots spread unsolicited content at scale. Spammers may also use them for more hostile attacks, such as credential cracking and phishing.

Download Bots

Download bots automate large numbers of software downloads in order to inflate an application's app store statistics and apparent popularity.

DoS or DDoS Bots

DoS and DDoS bots are meant to bring websites down. An overwhelming number of bots flood a server with requests and overload it, preventing the service from functioning for legitimate users.

Final Thoughts

As bot technologies continue to evolve, website owners deploy increasingly sophisticated anti-bot safeguards. This creates a new obstacle for web scrapers, who may be blocked while collecting public data for science, market research, ad verification, and similar purposes. Fortunately, The Social Proxy offers various efficient web scraping options designed to avoid blocks.