const request = require('request');

const csv = require('csv-writer').createObjectCsvWriter;

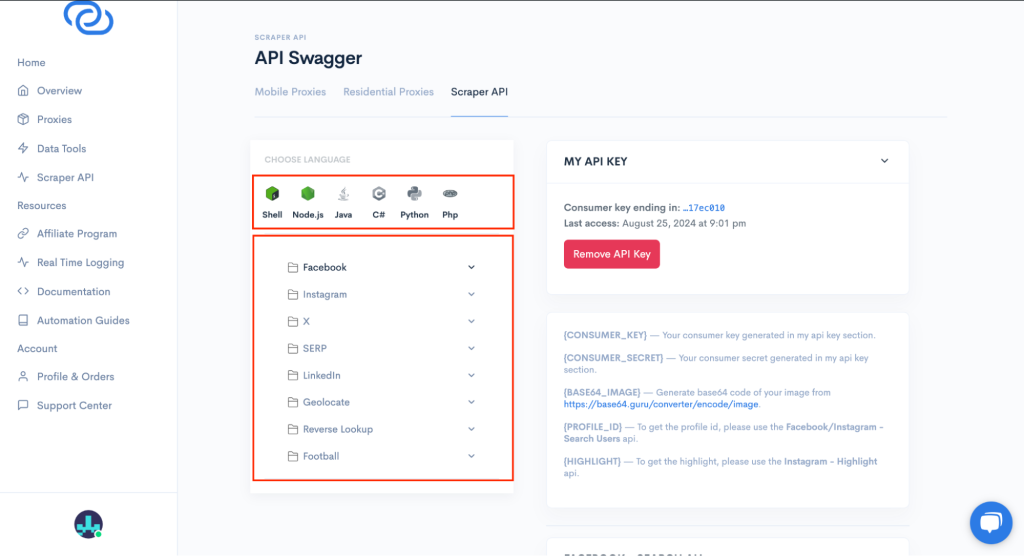

// Function to scrape Facebook

function scrapeFacebook(callback) {

const options = {

method: 'POST',

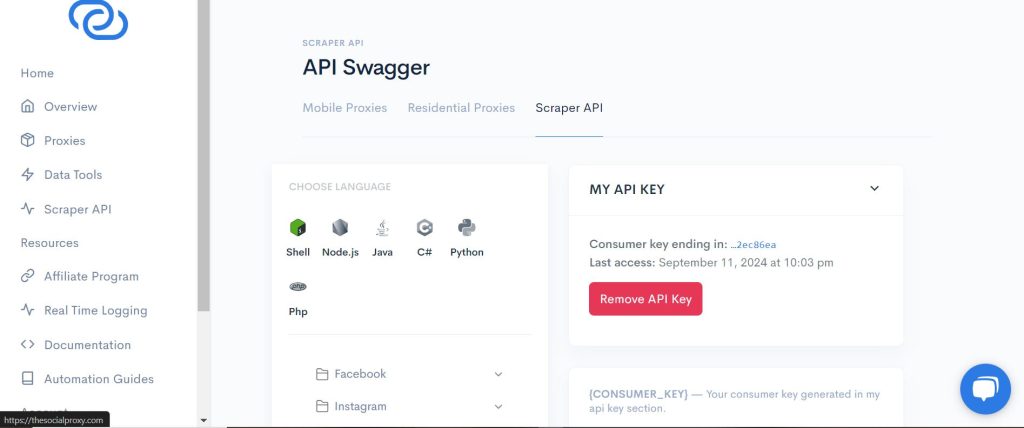

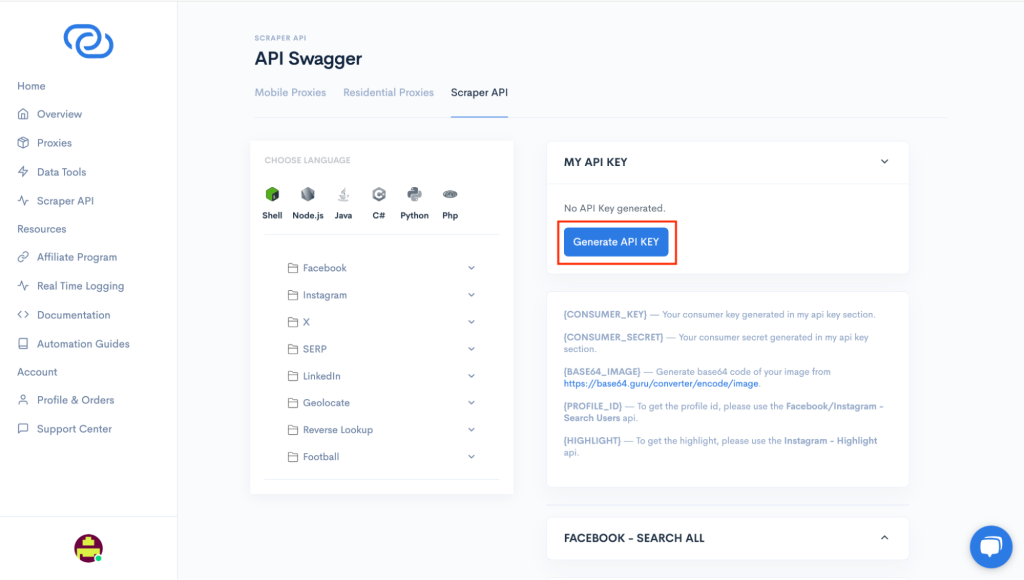

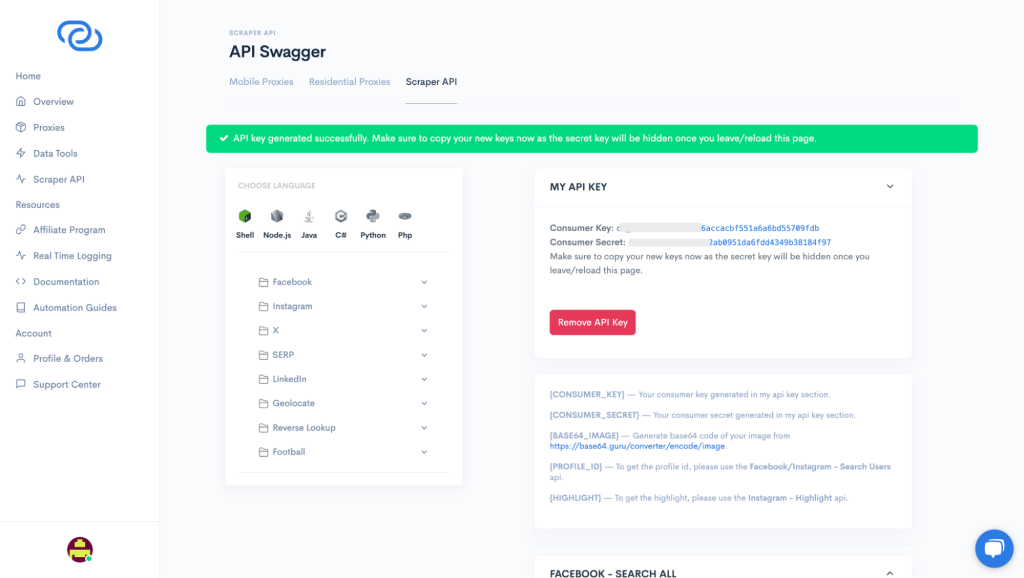

url: 'https://thesocialproxy.com/wp-json/tsp/facebook/v1/search/posts?consumer_key={CONSUMER_KEY}&consumer_secret={CONSUMER_SECRET}',

headers: {

'Content-Type': 'application/json',

},

body: JSON.stringify({

typed_query: 'WooCommerce review',

start_date: '2024-08-15',

end_date: '2024-08-24',

}),

};

request(options, function (error, response) {

// Pass network errors to the caller instead of throwing inside the async callback

if (error) return callback(error);

try {

const data = JSON.parse(response.body);

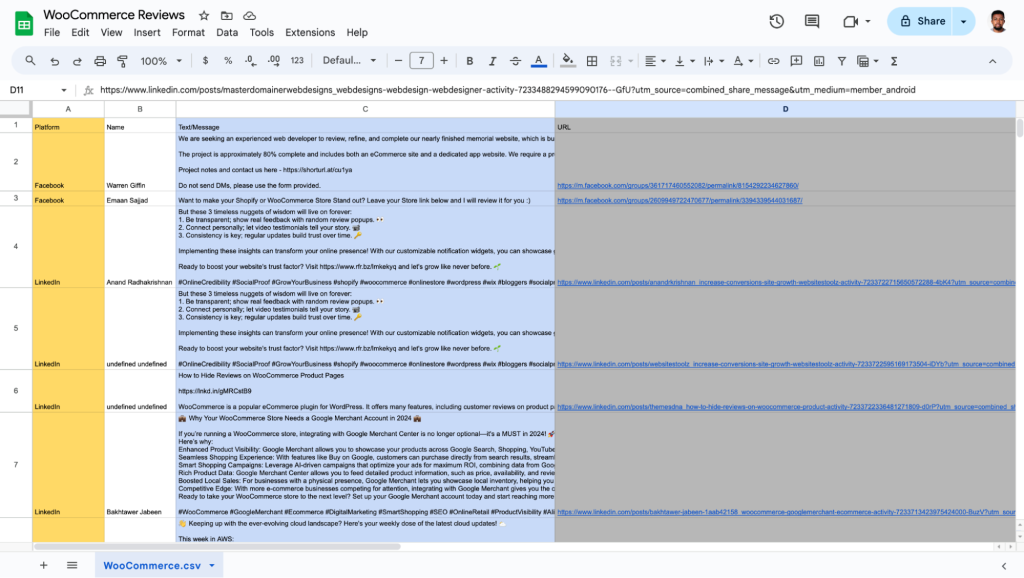

const results = data.data.results.map((result) => ({

platform: 'Facebook',

name: result.actors[0].name,

text: result.message,

url: result.url,

}));

callback(null, results);

} catch (parseError) {

callback(parseError);

}

});

}
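
// A minimal sketch, not part of the original script: toFacebookRow is a
// hypothetical helper that maps one search result to a CSV row without
// throwing when a post has no actors. The field names (actors, message, url)
// come from the mapping above; the 'Unknown' and empty-string fallbacks are
// assumptions.
function toFacebookRow(result) {
  const actors = Array.isArray(result.actors) ? result.actors : [];
  return {
    platform: 'Facebook',
    name: actors.length > 0 && actors[0].name ? actors[0].name : 'Unknown',
    text: result.message || '',
    url: result.url || '',
  };
}
// Possible usage inside scrapeFacebook: data.data.results.map(toFacebookRow)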

// Function to scrape LinkedIn

function scrapeLinkedIn(callback) {

const options = {

method: 'GET',

// The space in the keywords value is percent-encoded so the URL is valid

url: 'https://thesocialproxy.com/wp-json/tsp/linkedin/v1/search/posts?consumer_key={CONSUMER_KEY}&consumer_secret={CONSUMER_SECRET}&keywords=WooCommerce%20review',

headers: {

'Content-Type': 'application/json',

},

};

request(options, function (error, response) {

// Pass network errors to the caller instead of throwing inside the async callback

if (error) return callback(error);

try {

const data = JSON.parse(response.body);

const results = data.data.posts.map((post) => ({

platform: 'LinkedIn',

name: post.author.first_name + ' ' + post.author.last_name,

text: post.text,

url: post.url,

}));

callback(null, results);

} catch (parseError) {

callback(parseError);

}

});

}
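
// A minimal sketch, not part of the original script: buildSearchUrl is a
// hypothetical helper that assembles a query string with every key and value
// percent-encoded, so search terms containing spaces or symbols stay valid.
// Whether the API expects further parameters is not covered here.
function buildSearchUrl(baseUrl, params) {
  const query = Object.entries(params)
    .map(([key, value]) => `${encodeURIComponent(key)}=${encodeURIComponent(value)}`)
    .join('&');
  return `${baseUrl}?${query}`;
}
// Possible usage with a placeholder base URL:
// buildSearchUrl('https://example.com/search', { keywords: 'WooCommerce review' })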

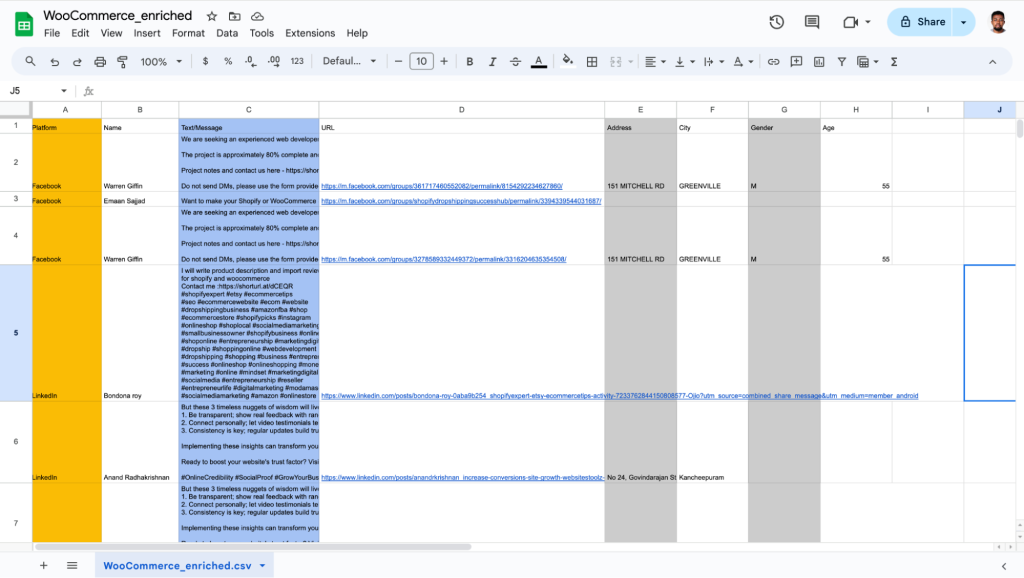

// Function to save results to CSV

function saveToCSV(data) {

const csvWriter = csv({

path: 'WooCommerce.csv',

header: [

{ id: 'platform', title: 'Platform' },

{ id: 'name', title: 'Name' },

{ id: 'text', title: 'Text/Message' },

{ id: 'url', title: 'URL' },

],

});

csvWriter

.writeRecords(data)

.then(() => console.log('CSV file has been written successfully'))

.catch((writeError) => console.error('Failed to write CSV file:', writeError));

}

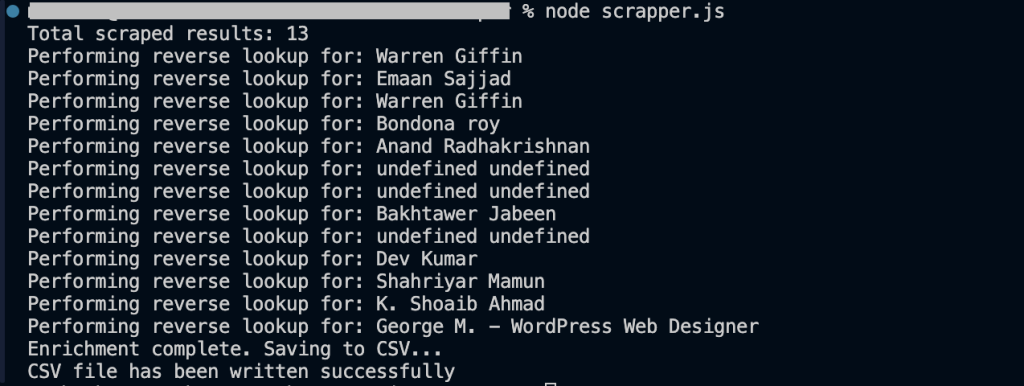

// Main function to run both scrapes and combine results

function reviewScraper() {

scrapeFacebook((fbError, fbResults) => {

if (fbError) {

console.error('Facebook scraping error:', fbError);

return;

}

scrapeLinkedIn((liError, liResults) => {

if (liError) {

console.error('LinkedIn scraping error:', liError);

return;

}

const combinedResults = [...fbResults, ...liResults];

saveToCSV(combinedResults);

});

});

}

// Run the scrapes

reviewScraper();