Unlimited IP Pool

- Login

- Dashboard

- Start Free Trial

- Proxiesnew

-

-

-

Cost Effective IP Pool

Cost Effective IP Pool

-

-

-

- AI Scrapers

-

- Solutions

-

-

-

Data Sourcing for LLMs & ML

Accelerate ventures securely

Proxy selection for complex cases

Some other kind of copy

Protect your brand on the web

Reduce ad fraud risks

-

-

-

- Pricing

- |

- Resources

-

-

Resources menu

-

-

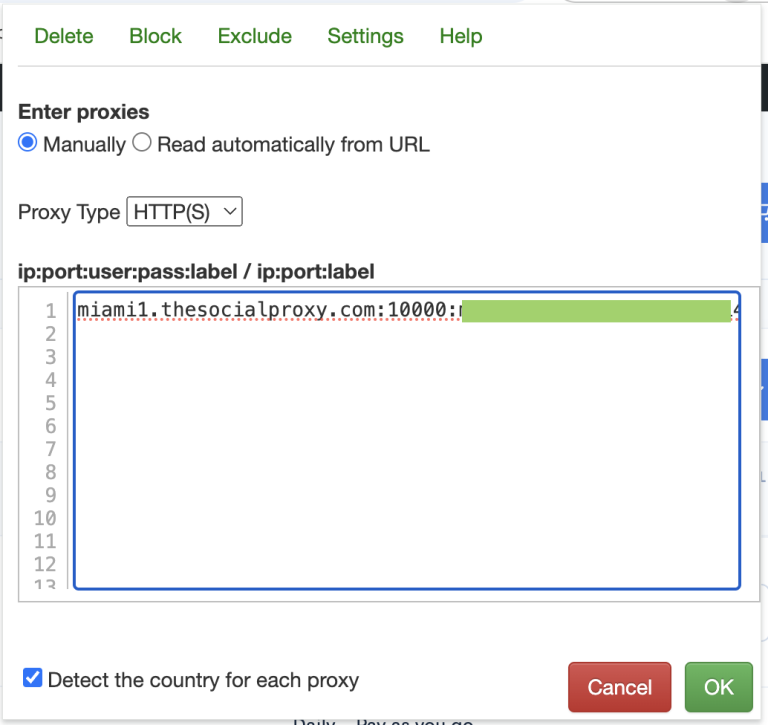

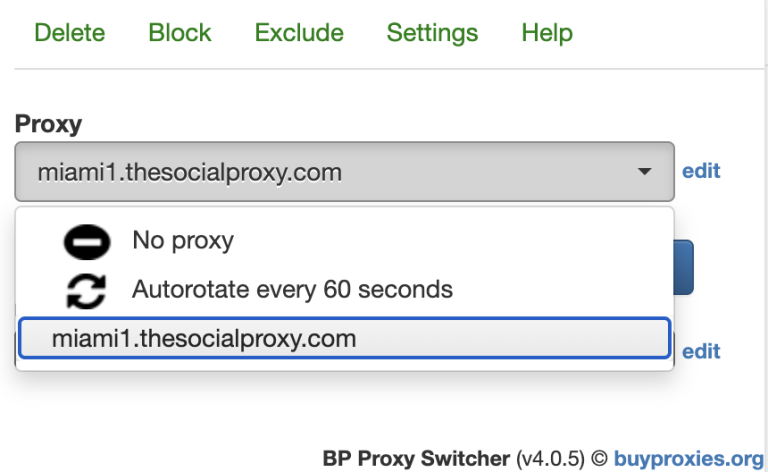

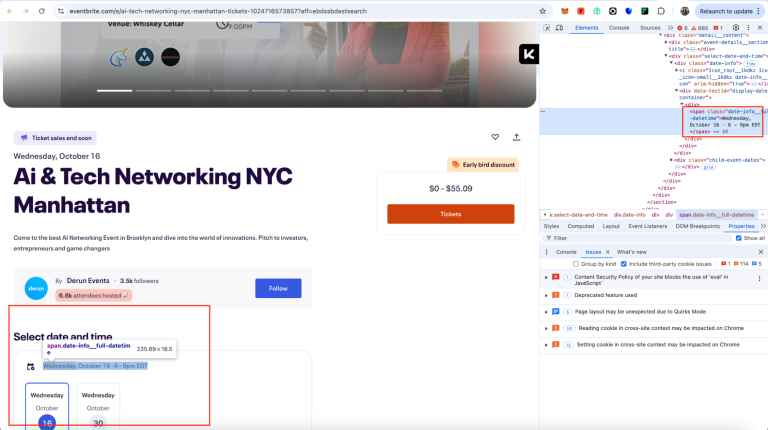

- Quick Start Guides