How to Scrape Reviews from Google Business (Google Maps)

The Social Proxy Team

Customer feedback is critical for understanding which products your customers love and which ones they don’t. That’s why Google Business reviews are a goldmine: they provide direct insight into customer opinions, expectations, and service quality. Businesses can use this information to identify trends, understand customer pain points, and improve their competitive positioning.

In this tutorial, you’ll learn how to use Node.js and Puppeteer to extract reviews from Google Business listings on Google Maps. You’ll also use The Social Proxy’s mobile proxy to get past Google’s anti-scraping defenses, and then analyze the extracted data to uncover trends such as recurring complaints or common praise (if any). Finally, we’ll demonstrate how to export the scraped data to a CSV file for further analysis.

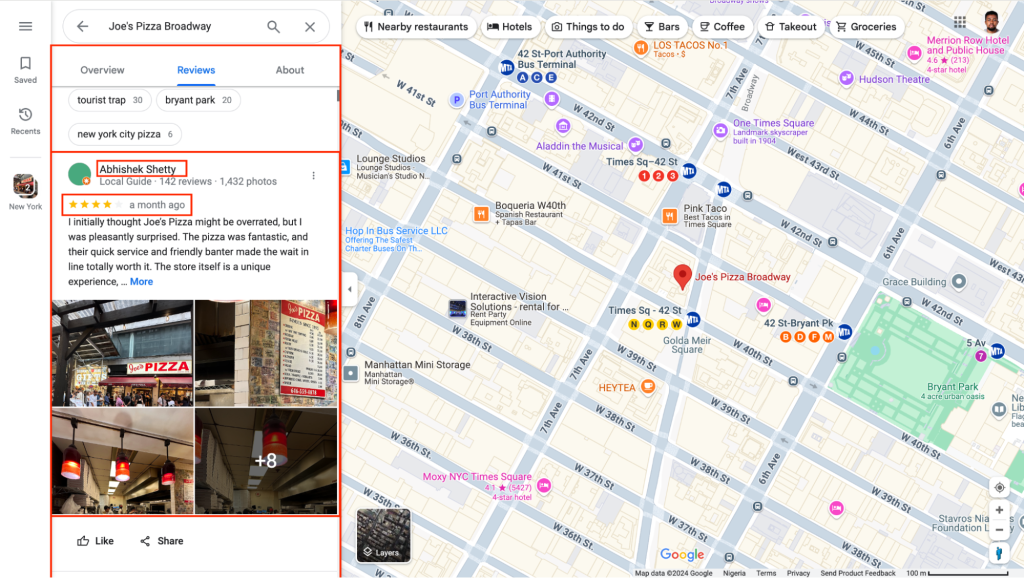

Understanding the Google Maps structure

When scraping reviews from Google Business (Google Maps), it’s important to understand how the review data is structured in the page’s HTML. Each piece of data you want (review text, reviewer name, rating, date) sits in a specific HTML element that you can target with a CSS selector. To find these elements, inspect the page with your browser’s developer tools.

In this tutorial, you’ll need to identify the parent div that contains all the reviews and the card for each review. Inside the review card, you will find the elements that contain the review, reviewer name, stars, and timestamps.

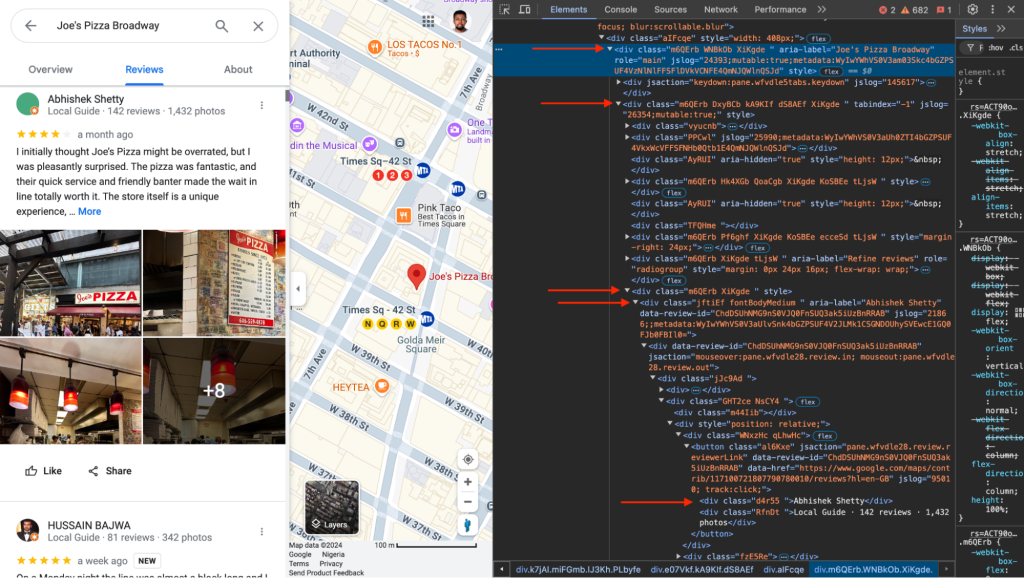

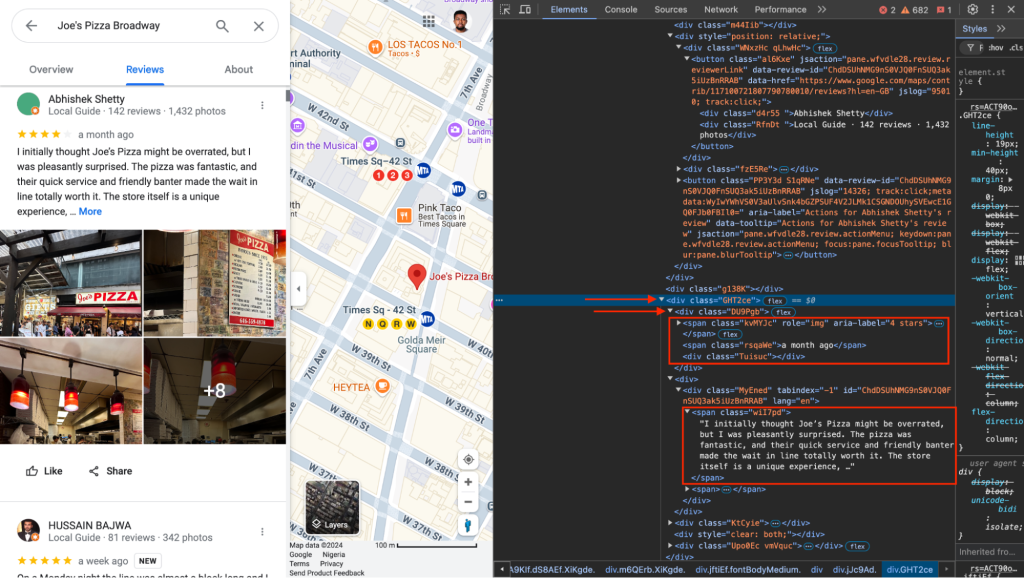

From inspecting the web page, you can see that the parent container is a div with the .WNBkOb class. The other elements are:

- Review container: The reviews are enclosed in a div element with the .XiKgde class.

- Review card: Each review is in a div card with the .jftiEf.fontBodyMedium classes.

- Review content: The text of the review is enclosed in a div element with the .wiI7pd class.

- Reviewer name: The reviewer’s name is stored in a div element with the .d4r55 class.

- Star rating: The stars are in a span element with the .kvMYJc class.

- Review date: The date is in a span element with the .rsqaWe class.
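Because these class names are generated by Google and can change without notice, it can help to keep them in one place. The sketch below is an optional, assumption-based refactor rather than part of the original script: a hypothetical SELECTORS object holding the classes listed above, plus a hypothetical parseStarRating helper that turns the star span’s aria-label (the attribute the Step 2 script reads, e.g. "4 stars") into a number.

```javascript
// Hypothetical selector map: the class names observed in this tutorial.
// Update them here if Google changes the Maps markup.
const SELECTORS = {
  reviewCard: '.jftiEf.fontBodyMedium',
  reviewText: '.wiI7pd',
  reviewerName: '.d4r55',
  starRating: '.kvMYJc',
  reviewDate: '.rsqaWe',
};

// Hypothetical helper: convert an aria-label such as "4 stars" or "1 star" to a number.
// Returns 0 when the label is missing or contains no number (the scraper's default).
function parseStarRating(ariaLabel) {
  if (typeof ariaLabel !== 'string') return 0;
  const match = ariaLabel.match(/(\d+)\s+stars?/i);
  return match ? parseInt(match[1], 10) : 0;
}
```

Functions defined in Node.js are not available inside page.evaluate(), but plain data like SELECTORS can be passed in as an extra argument, for example page.evaluate((sel) => document.querySelectorAll(sel.reviewCard).length, SELECTORS). parseStarRating would run in Node.js if you return the raw aria-label strings from the page.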

A step-by-step guide to scraping Google Business reviews using The Social Proxy

Step 1: Set up the environment

Before scraping reviews, you need to set up a suitable environment with Node.js and the Puppeteer package. Here’s the general process:

- Install Puppeteer by running npm install puppeteer in your project folder.

- Use the fs (File System) module to save data. It is built into Node.js, so there is nothing extra to install.

Next, follow these instructions to get your mobile proxy credentials from The Social Proxy.

Step 2: Write the script and extract the review data

Once your environment is set up, you can write the script to scrape Google Business reviews:

```javascript
const puppeteer = require('puppeteer');
const fs = require('fs'); // Used in Step 4 to write the CSV file

// Proxy configuration
const proxyHost = 'your-proxy-host'; // Replace with your proxy host
const proxyPort = 'your-proxy-port'; // Replace with your proxy port
const proxyUsername = 'your-username'; // Replace with your proxy username
const proxyPassword = 'your-password'; // Replace with your proxy password

(async () => {
  try {
    // Joe's Pizza (Broadway) listing, opened on its reviews; hl=en keeps the UI in English
    const url =
      "https://www.google.com/maps/place/Joe's+Pizza+Broadway/@40.7546795,-73.989604,17z/data=!3m1!5s0x89c259ab3e91ed73:0x4074c4cfa25e210b!4m8!3m7!1s0x89c259ab3c1ef289:0x3b67a41175949f55!8m2!3d40.7546795!4d-73.9870291!9m1!1b1!16s%2Fg%2F11bw4ws2mt?authuser=0&hl=en&entry=ttu&g_ep=EgoyMDI0MTAwMS4wIKXMDSoASAFQAw%3D%3D";

    // Launch a visible browser that routes traffic through the mobile proxy
    const browser = await puppeteer.launch({
      headless: false,
      args: [
        '--window-size=1920,1080',
        `--proxy-server=http://${proxyHost}:${proxyPort}`,
      ],
    });

    const page = await browser.newPage();

    // Supply the proxy credentials when the proxy asks for them
    await page.authenticate({
      username: proxyUsername,
      password: proxyPassword,
    });

    await page.setViewport({ width: 1920, height: 1080 });

    console.log('Navigating to page...');
    await page.goto(url, { waitUntil: 'networkidle2', timeout: 60000 });

    // Wait for the first review cards to render
    console.log('Waiting for reviews to load...');
    try {
      await page.waitForSelector('.jftiEf.fontBodyMedium', { timeout: 10000 });
    } catch (error) {
      console.error('Could not find any reviews on the page');
      await browser.close();
      return;
    }

    // Scroll the review panel so more reviews load (autoScroll is defined in Step 3)
    console.log('Starting to scroll for reviews...');
    const totalReviews = await autoScroll(page);
    console.log(`Finished scrolling. Found ${totalReviews} reviews.`);

    // Extract name, text, date, and star rating from every review card
    const reviews = await page.evaluate(() => {
      const reviewCards = document.querySelectorAll('.jftiEf.fontBodyMedium');
      const reviewData = [];

      reviewCards.forEach((card) => {
        const reviewerName = card.querySelector('.d4r55')?.innerText || null;
        const reviewText =
          card.querySelector('.wiI7pd')?.innerText || 'No review content';
        const reviewDate = card.querySelector('.rsqaWe')?.innerText || null;

        // The rating is read from the aria-label, e.g. "5 stars" (singular "star" also matches)
        const starRatingElement = card.querySelector('.kvMYJc');
        const ratingText = starRatingElement?.getAttribute('aria-label');
        let starRating = 0;
        if (ratingText) {
          const match = ratingText.match(/(\d+)\s+stars?/);
          if (match) {
            starRating = parseInt(match[1], 10);
          }
        }

        reviewData.push({ reviewerName, reviewText, reviewDate, starRating });
      });

      return reviewData;
    });

    console.log(`Successfully extracted ${reviews.length} reviews`);
    // The script continues in Step 4 (CSV export, closing the browser, and error handling).
```

Error handling

The mobile proxy from The Social Proxy is integrated into the script to keep scraping smooth and reduce common problems such as CAPTCHAs, blocking, and IP bans.

In the code above, replace proxyHost, proxyPort, proxyUsername, and proxyPassword with your own credentials.
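Rather than hard-coding credentials in the script, you could read them from environment variables. This is a minimal sketch; the variable names PROXY_HOST, PROXY_PORT, PROXY_USERNAME, and PROXY_PASSWORD and the helpers loadProxyConfig and proxyServerArg are hypothetical, not something The Social Proxy requires.

```javascript
// Hypothetical helper: read proxy settings from environment variables
function loadProxyConfig(env = process.env) {
  const config = {
    host: env.PROXY_HOST,
    port: env.PROXY_PORT,
    username: env.PROXY_USERNAME,
    password: env.PROXY_PASSWORD,
  };

  // Fail fast with a clear message instead of launching Chromium with a broken proxy
  const missing = Object.entries(config)
    .filter(([, value]) => !value)
    .map(([key]) => key);
  if (missing.length > 0) {
    throw new Error(`Missing proxy settings: ${missing.join(', ')}`);
  }

  return config;
}

// Build the Chromium flag passed in puppeteer.launch({ args: [...] })
function proxyServerArg({ host, port }) {
  return `--proxy-server=http://${host}:${port}`;
}
```

You would then pass proxyServerArg(proxy) in the args array of puppeteer.launch() and give proxy.username and proxy.password to page.authenticate().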

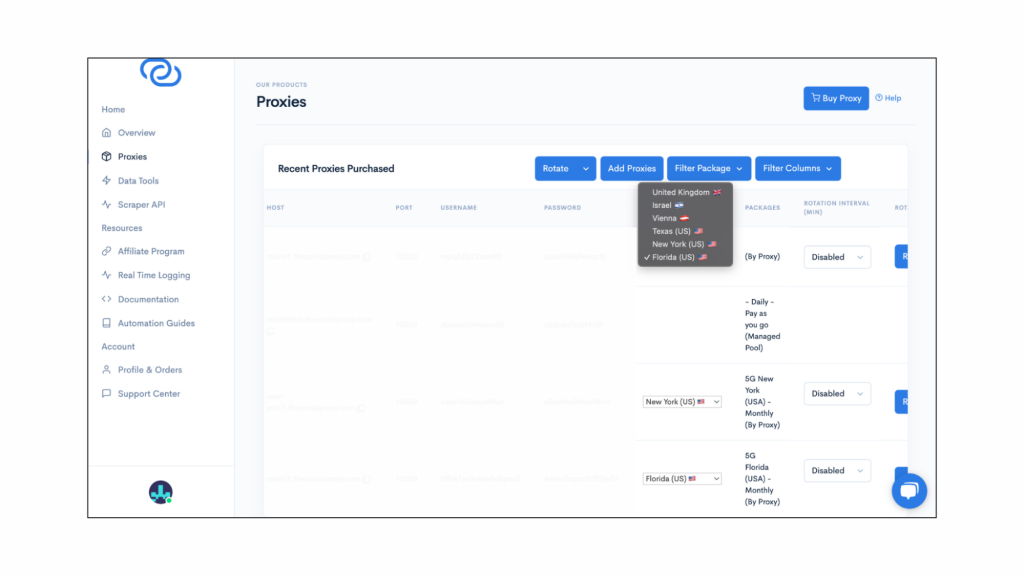

Proxy rotation

If you’re scraping larger data sets, rotate proxies frequently to reduce the risk of being blocked. You can use The Social Proxy to automate proxy rotation.
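If you manage rotation yourself instead, one simple approach is round-robin: cycle through a list of proxy endpoints and use the next one for each listing you scrape. The sketch below assumes you have several endpoints (the values are placeholders) and uses hypothetical helpers createProxyRotator and assignProxies; it is not The Social Proxy’s rotation API.

```javascript
// Hypothetical round-robin rotator over a list of proxy endpoints
function createProxyRotator(proxies) {
  if (!Array.isArray(proxies) || proxies.length === 0) {
    throw new Error('Provide at least one proxy');
  }
  let index = 0;
  return function nextProxy() {
    const proxy = proxies[index];
    index = (index + 1) % proxies.length; // wrap around to the first proxy
    return proxy;
  };
}

// Pair each listing URL with the next proxy so consecutive listings use different endpoints
function assignProxies(urls, proxies) {
  const nextProxy = createProxyRotator(proxies);
  return urls.map((url) => ({ url, proxy: nextProxy() }));
}
```

Because Chromium takes its proxy from the --proxy-server flag at launch, switching endpoints with this approach means launching a new browser for each assignment.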

Step 3: Handling auto scroll

To scrape more than the first few reviews, you’ll need an auto-scroll function that keeps scrolling the review panel until no new reviews load. Here’s how to implement it:

```javascript
// Scrolls the review panel until no new review cards appear; returns the number of loaded reviews
async function autoScroll(page) {
  return page.evaluate(async () => {
    async function getScrollableElement() {
      // Try different selectors
      const selectors = [
        '.DxyBCb [role="main"]', // Original selector
        '.WNBkOb [role="main"]', // Broader main content selector
        '.review-dialog-list', // Alternative review container
        '.section-layout-root', // Even broader container
      ];

      for (const selector of selectors) {
        const element = document.querySelector(selector);
        if (element) return element;
      }

      // If no selector works, look for any scrollable div that contains review cards
      const possibleContainers = document.querySelectorAll('div');
      for (const container of possibleContainers) {
        if (
          container.scrollHeight > container.clientHeight &&
          container.querySelector('.jftiEf.fontBodyMedium')
        ) {
          return container;
        }
      }

      return null;
    }

    const scrollable = await getScrollableElement();
    if (!scrollable) {
      console.error('Could not find scrollable container');
      return 0;
    }

    // Count the review cards currently in the DOM (used to detect when loading stops)
    const getReviewCount = () =>
      document.querySelectorAll('.jftiEf.fontBodyMedium').length;

    let lastCount = getReviewCount();
    let noChangeCount = 0;
    const maxTries = 10; // Stop after 10 attempts with no new reviews

    while (noChangeCount < maxTries) {
      // Jump to the bottom of the panel to trigger lazy loading
      if (scrollable.scrollTo) {
        scrollable.scrollTo(0, scrollable.scrollHeight);
      } else {
        scrollable.scrollTop = scrollable.scrollHeight;
      }

      // Give Google Maps time to load the next batch
      await new Promise((resolve) => setTimeout(resolve, 2000));

      const newCount = getReviewCount();
      if (newCount === lastCount) {
        noChangeCount++;
      } else {
        noChangeCount = 0;
        lastCount = newCount;
      }
    }

    return lastCount;
  });
}
```

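Google Maps loads more reviews as you scroll instead of splitting them into pages, so without this function the script would only capture the reviews rendered on the initial load. Add it to the same file as the Step 2 script, which calls autoScroll(page) before extracting the reviews.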
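The core of autoScroll is its stopping rule: keep scrolling until the review count stays the same for maxTries consecutive attempts. The sketch below pulls that rule out into a hypothetical scrollUntilStable function that takes the scroll and count operations as callbacks, so the logic can be tested without a browser.

```javascript
// Hypothetical, browser-independent version of the autoScroll stopping rule.
// scrollOnce: performs one scroll; getCount: returns how many items are loaded.
async function scrollUntilStable(scrollOnce, getCount, { maxTries = 10, delayMs = 2000 } = {}) {
  let lastCount = await getCount();
  let noChangeCount = 0;

  while (noChangeCount < maxTries) {
    await scrollOnce();
    await new Promise((resolve) => setTimeout(resolve, delayMs)); // wait for lazy loading

    const newCount = await getCount();
    if (newCount === lastCount) {
      noChangeCount++; // nothing new loaded on this attempt
    } else {
      noChangeCount = 0; // progress: reset the counter
      lastCount = newCount;
    }
  }

  return lastCount;
}
```

With Puppeteer, scrollOnce and getCount could each wrap a page.evaluate() call; that costs one round trip per step, whereas the original autoScroll runs the whole loop inside a single page.evaluate() call.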

Step 4: Store and analyze data

After scraping, store the review data in a CSV file for further analysis:

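```javascript
// Wrap a value in double quotes and double any embedded quotes (standard CSV escaping),
// so commas in names or review text don't shift the columns
function toCsvField(value) {
  const text = value === null || value === undefined ? '' : String(value);
  return `"${text.replace(/"/g, '""')}"`;
}

// Function to create a CSV string from reviews
function createCsvString(reviews) {
  const headers = ['Reviewer Name', 'Review Text', 'Review Date', 'Star Rating'];
  const csvRows = [headers];

  reviews.forEach((review) => {
    // Replace line breaks with spaces so each review stays on one line
    const cleanReviewText = (review.reviewText || '').replace(/\r?\n/g, ' ');

    csvRows.push([
      review.reviewerName || '',
      cleanReviewText,
      review.reviewDate || '',
      review.starRating || '', // 0 (rating not found) is written as an empty cell
    ]);
  });

  return csvRows.map((row) => row.map(toCsvField).join(',')).join('\n');
}

// Keep the require statements, proxy configuration, and autoScroll function
// from Steps 2 and 3 at the top level of the same file.
(async () => {
  try {
    // ...paste the code from inside the Step 2 try block here
    // (from the url definition through the review extraction)...

    // Create CSV string
    const csvString = createCsvString(reviews);

    // Generate a filename with a timestamp, e.g. reviews_2024-10-01T12-00-00-000Z.csv
    const timestamp = new Date().toISOString().replace(/[:.]/g, '-');
    const filename = `reviews_${timestamp}.csv`;

    // Save to file
    fs.writeFileSync(filename, csvString);
    console.log(`Reviews saved to ${filename}`);

    // Also print the first few reviews to the console
    console.log('First few reviews:', reviews.slice(0, 3));

    await browser.close();
  } catch (error) {
    console.error('An error occurred:', error);
  }
})();
```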

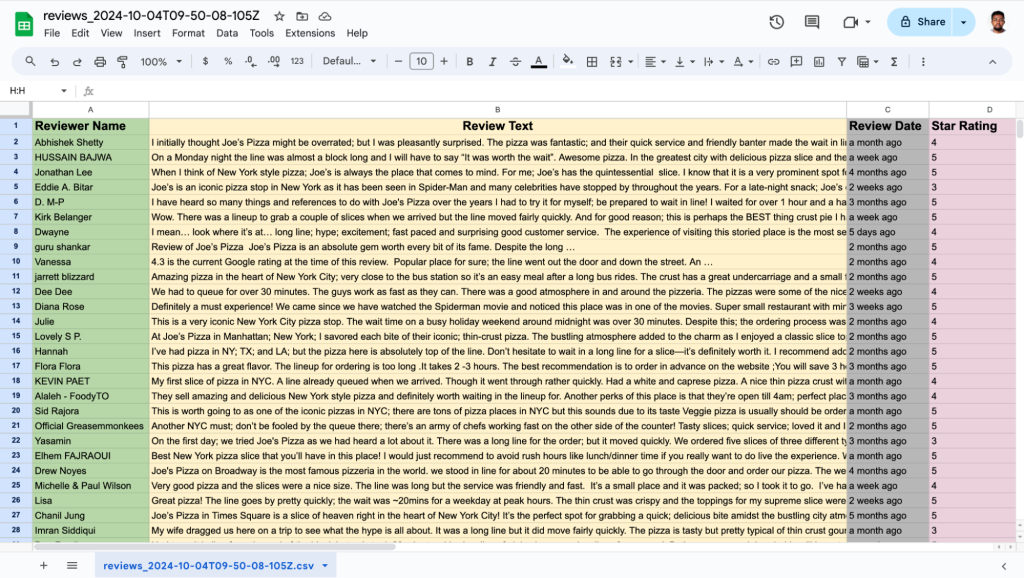

This step saves the extracted review data in a CSV file, which you can open in Excel or any other spreadsheet application. In our run, we scraped over 360 reviews.

With these reviews in hand, you can put the data to work in several ways. Sentiment analysis helps you gauge customer satisfaction and spot trends that show a business where it is strong and where it is weak. By analyzing keyword frequency and common themes within reviews, businesses can pinpoint specific pain points, such as service issues or product complaints, and target their actions accordingly. A star rating distribution and a time series analysis also make it easy to track customer satisfaction over time and see whether changes (like new services or menus) have improved the customer experience.
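As a starting point for the star rating distribution and keyword analysis, the sketch below works on the reviews array produced by the script (objects with reviewText and starRating). The helper names starDistribution and topKeywords and the short stopword list are illustrative assumptions; proper sentiment analysis would need a dedicated NLP library.

```javascript
// Count reviews per star rating; 0 means the scraper could not read a rating
function starDistribution(reviews) {
  const counts = { 0: 0, 1: 0, 2: 0, 3: 0, 4: 0, 5: 0 };
  for (const { starRating } of reviews) {
    if (starRating in counts) counts[starRating] += 1;
  }
  return counts;
}

// Illustrative stopword list (an assumption); extend it for real analysis
const STOPWORDS = new Set([
  'the', 'and', 'was', 'for', 'with', 'this', 'that', 'were', 'are',
  'but', 'they', 'our', 'you', 'had', 'have', 'very', 'just',
]);

// Most frequent words in reviews rated between 1 and maxStars
function topKeywords(reviews, { maxStars = 5, limit = 10 } = {}) {
  const freq = new Map();
  for (const { reviewText, starRating } of reviews) {
    if (!(starRating >= 1 && starRating <= maxStars)) continue;
    if (!reviewText || reviewText === 'No review content') continue; // scraper's placeholder
    const words = reviewText.toLowerCase().match(/[a-z']+/g) || [];
    for (const word of words) {
      if (word.length < 3 || STOPWORDS.has(word)) continue; // skip short words and stopwords
      freq.set(word, (freq.get(word) || 0) + 1);
    }
  }
  // Sort by count (descending), then alphabetically for stable output
  return [...freq.entries()]
    .sort((a, b) => b[1] - a[1] || a[0].localeCompare(b[0]))
    .slice(0, limit);
}
```

For example, topKeywords(reviews, { maxStars: 2 }) lists the most frequent words in 1- and 2-star reviews, a quick way to surface recurring complaints.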

Another significant use case is competitive analysis, in which businesses compare their reviews with those of their competitors to identify where they excel or fall short. Finally, by leveraging customer feedback, businesses can prioritize product or service improvements, address the most frequent concerns, and enhance customer satisfaction based on up-to-date, user-generated insights.
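For competitive analysis, you could run the same scraper on a competitor’s listing and compare summary statistics. This hypothetical sketch computes the review count, average rating, and share of 1- and 2-star reviews per business, then ranks businesses by average rating. It ignores reviews whose rating could not be parsed (a starRating of 0).

```javascript
// Summarize one business's reviews: count, average rating, and share of 1-2 star reviews
function summarizeReviews(reviews) {
  const rated = reviews.filter((r) => r.starRating >= 1 && r.starRating <= 5);
  const total = rated.reduce((sum, r) => sum + r.starRating, 0);
  const negative = rated.filter((r) => r.starRating <= 2).length;
  return {
    count: rated.length,
    averageRating: rated.length ? Number((total / rated.length).toFixed(2)) : null,
    negativeShare: rated.length ? Number((negative / rated.length).toFixed(2)) : null,
  };
}

// Compare businesses side by side, e.g. { "Joe's Pizza": reviewsA, "Competitor": reviewsB }
function compareBusinesses(reviewsByBusiness) {
  return Object.entries(reviewsByBusiness)
    .map(([name, reviews]) => ({ name, ...summarizeReviews(reviews) }))
    .sort((a, b) => (b.averageRating ?? 0) - (a.averageRating ?? 0)); // highest average first
}
```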

Conclusion

In this tutorial, we demonstrated how to scrape Google Business reviews from a Google Maps listing (Joe’s Pizza on Broadway in New York) using Node.js, Puppeteer, and The Social Proxy’s mobile proxy to get past Google’s anti-scraping mechanisms. You learned how to target essential HTML elements, such as review content, reviewer names, timestamps, and star ratings, to extract valuable review data.

With the data you’ve collected, there are many directions to explore. You can use the reviews to conduct market analysis, gauge customer sentiment, or identify gaps in competitors’ services. To go further, consider extending your scraper to other regions or categories: the same techniques work for any business type, whether restaurants, hotels, or other services, across different cities and countries.

FAQs about scraping Google Business reviews

How do I extract Google business reviews?

You can use Python or Node.js with a browser automation tool such as Selenium or Puppeteer to automate the extraction of reviews from Google Business pages. To get past Google’s anti-scraping defenses, use The Social Proxy’s mobile proxy to mimic real users and reduce the chance of detection.

Are you allowed to scrape Google reviews?

Scraping Google reviews without permission may violate Google’s Terms of Service, so make sure you’re familiar with its policies before scraping. For officially supported access, use the Google Places API; if you do scrape, use a proxy provider like The Social Proxy that adheres to data regulations and sources data ethically.

How do I scrape Google Maps business data?

Browser automation tools like Selenium or Puppeteer can extract business names, addresses, and reviews from Google Maps. Because Google Maps renders its content with JavaScript, an HTML parser like BeautifulSoup only helps once a browser has rendered the page. Using mobile proxies that rotate IP addresses is crucial to avoid being detected and blocked by Google’s anti-scraping measures.

How do I get Google Maps reviews?

For large-scale data extraction, you can scrape reviews directly using scraping tools and mobile proxies. You can also use the Google Places API to access review data legally and reliably, but it returns at most five reviews per place, so scraping gives you more coverage and customization.